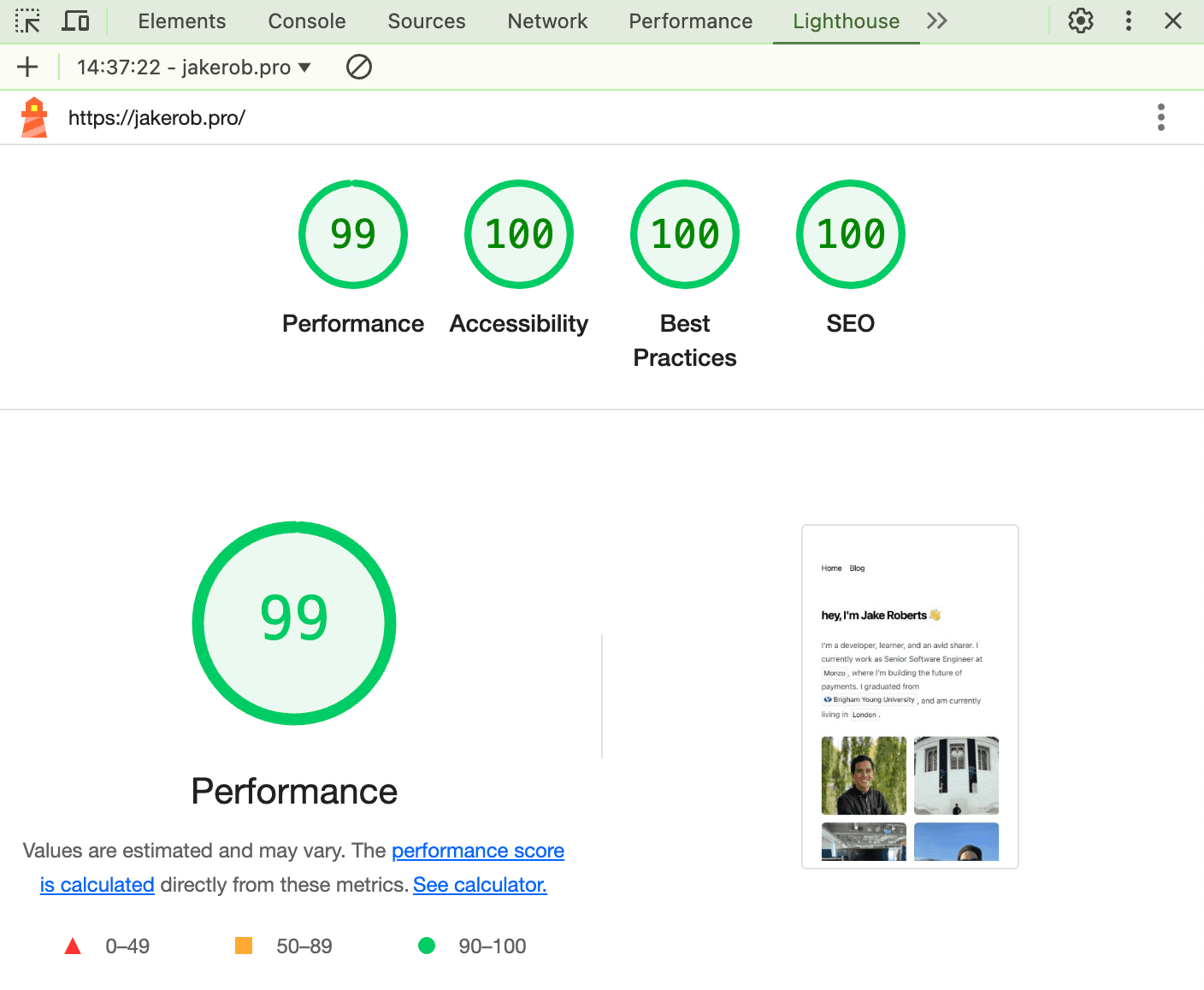

I have a problem: I want to self-host a website on my homelab, but I also want to give my users the best possible experience while maintaining privacy and security.

Take this blog, for example. It's mine, and I own the content on it. However, the reason you're getting a good experience is that it is served through Vercel.

Imagine if I were actually self-hosting this! I live in London. If I self-hosted this and you lived in Singapore, you would have to add about 175ms to every single network request!

And with waterfall effects, where one request can only start after another finishes, that delay stacks up. It would be a terrible experience.
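To put rough numbers on that, here is a small sketch of the arithmetic. It assumes the 175ms is paid once per round trip and that a waterfall is a chain of requests where each one waits for the previous one. The function name and the chain depth of three are made up for illustration.

```typescript
// Extra latency (in ms) that a distant origin adds to a request waterfall.
// Assumption: every dependent request in the chain pays the full added
// latency, and requests at the same depth run in parallel.
function addedWaterfallDelayMs(addedLatencyMs: number, chainDepth: number): number {
  if (chainDepth < 0 || !Number.isInteger(chainDepth)) {
    throw new RangeError("chainDepth must be a non-negative integer");
  }
  return addedLatencyMs * chainDepth;
}

// HTML -> CSS/JS -> data fetched by that JS: a chain three requests deep.
const extraMs = addedWaterfallDelayMs(175, 3);
console.log(`${extraMs}ms of extra waiting`); // 525ms of extra waiting
```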

Vercel mitigates this problem by serving the content close to you! They do it in a variety of different ways. The static assets (CSS, JS, etc.) are all served quickly from their global CDN.

Try this out with KeyCDN's performance test.

If the cache is warm, you'll see consistent TTFB under ~50ms worldwide. (Click the "Test" button a couple times to warm it up).
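If you'd rather measure from your own machine, here is a rough sketch. It assumes that the time until `fetch` resolves (which happens once the response headers arrive) is a good enough stand-in for TTFB. The function names are mine, and unlike the KeyCDN test this only measures from one location.

```typescript
// Minimal response shape we rely on; the built-in fetch Response satisfies it.
type FetchLike = (url: string) => Promise<{ status: number }>;

// Approximate TTFB: the fetch promise resolves once the response headers
// have arrived, so the elapsed time covers connection setup and the wait
// for the first bytes.
async function measureTtfbMs(
  url: string,
  fetchImpl: FetchLike,
  now: () => number = Date.now,
): Promise<number> {
  const start = now();
  await fetchImpl(url);
  return now() - start;
}

// Run the same URL several times; the first run may hit a cold cache.
async function sampleTtfbMs(url: string, fetchImpl: FetchLike, runs = 3): Promise<number[]> {
  const samples: number[] = [];
  for (let i = 0; i < runs; i++) {
    samples.push(await measureTtfbMs(url, fetchImpl));
  }
  return samples;
}
```

In Node 18+ or a browser you can pass the built-in `fetch` as `fetchImpl`, for example `sampleTtfbMs("https://jakerob.pro", fetch)`. The later samples should drop once the cache is warm.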

And when you fetch the initial HTML file, it is served either from their nearest edge compute (for static content) or from the origin server in Washington, D.C. (for dynamic content).

All this to say, Vercel gives a massive performance boost for customers that are globally distributed.

While self-hosters don't necessarily care about this in the same way, having a good experience is super important, and it's directly connected to how quickly you can download content (Time to First Byte).

Using Vercel (or another cloud service) has a couple of problems for the self-hosting crowd.

- Inherent trust

Because we are asking them to manage the whole deployment process for us, we are trusting them with our data. They can view and read everything that we do on a site deployed with them.

Encryption (https) is done with a TLS certificate that is held by the server that terminates the connection. In my example, Vercel holds the certificate, which means you and they are the only ones who can decrypt the communication required for this website to operate.

INFO: This is generally not a big deal for a business, as the tradeoffs are absolutely worth it. And distrust of Vercel/AWS or the equivalent isn't really in most people's threat model.

But for the homelab community, this could be very big! Imagine if I wanted to build a service that allowed someone to upload their important documents. For this to truly qualify for the self-hostable badge, it would need to have a proper deployment path that didn't use any cloud providers.

- Cost

Hosting services on Vercel/AWS can be very expensive. This is not a big deal to me, as I don't have any external users on my homelab services. But if a business wanted to, they could copy this to save money.

CDN

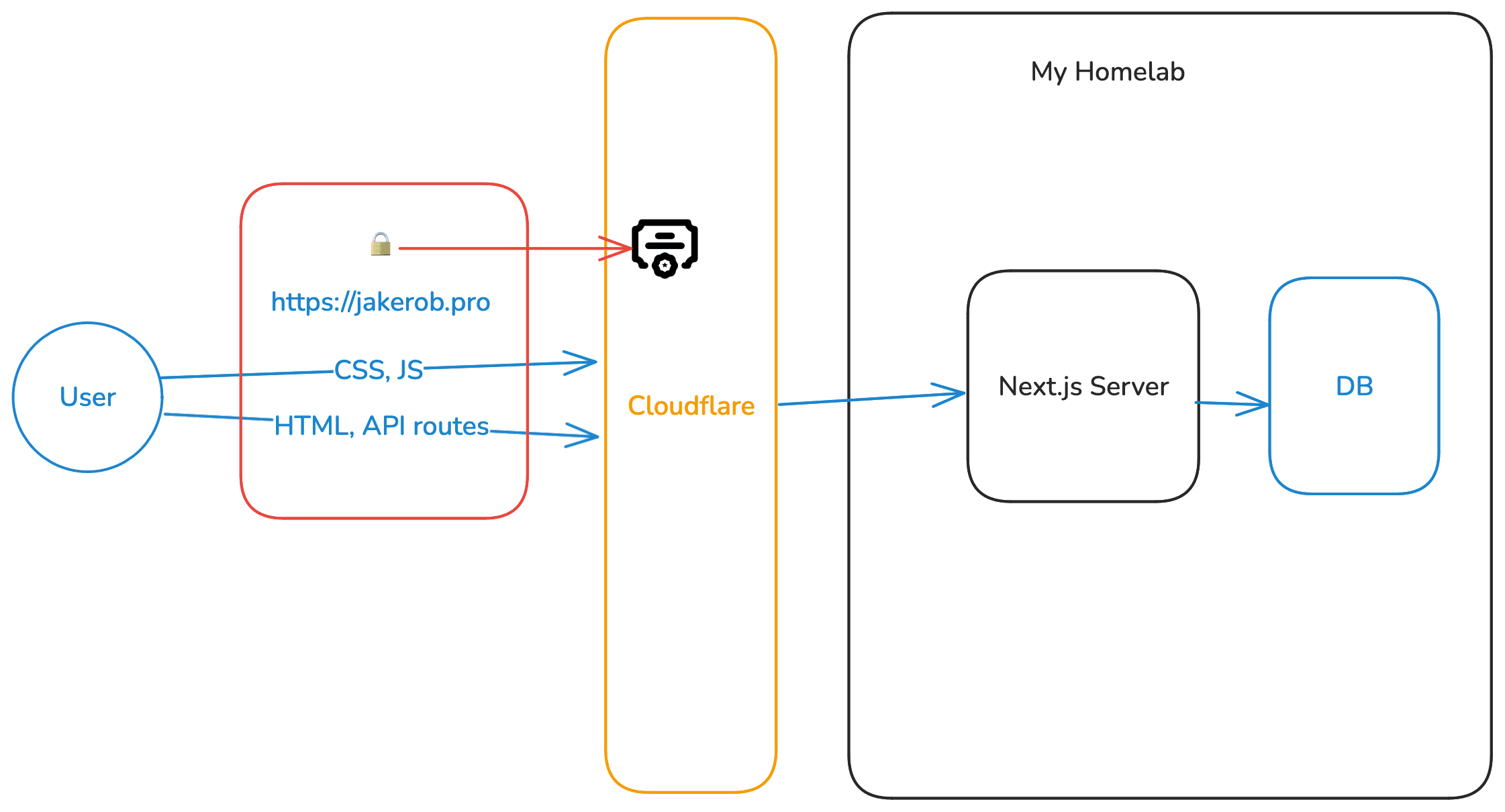

So I want to self-host this website. But I don't want to force my users to hit my server for everything!

Not all data is sensitive. My origin server (the one in my house) only needs to handle the requests that are actually private. Everything else can absolutely be sent to a CDN.

My CSS files don't need to be secured for this homelab website.

This doesn't mean they shouldn't have https. Every resource needs to be behind https, but I don't care if I am the one who holds the TLS certificates.

Why not just use Cloudflare tunnels/CDN?

You might be thinking, Cloudflare tunnels and CDN are perfect for this use case!

And you're almost right. The CDN is great, and the free tier is perfect for a homelab.

But the problem is that it will only work for the static content. The dynamic content can't be served on the same domain, otherwise we run into the inherent trust problem from above: Cloudflare holds the TLS certificates.

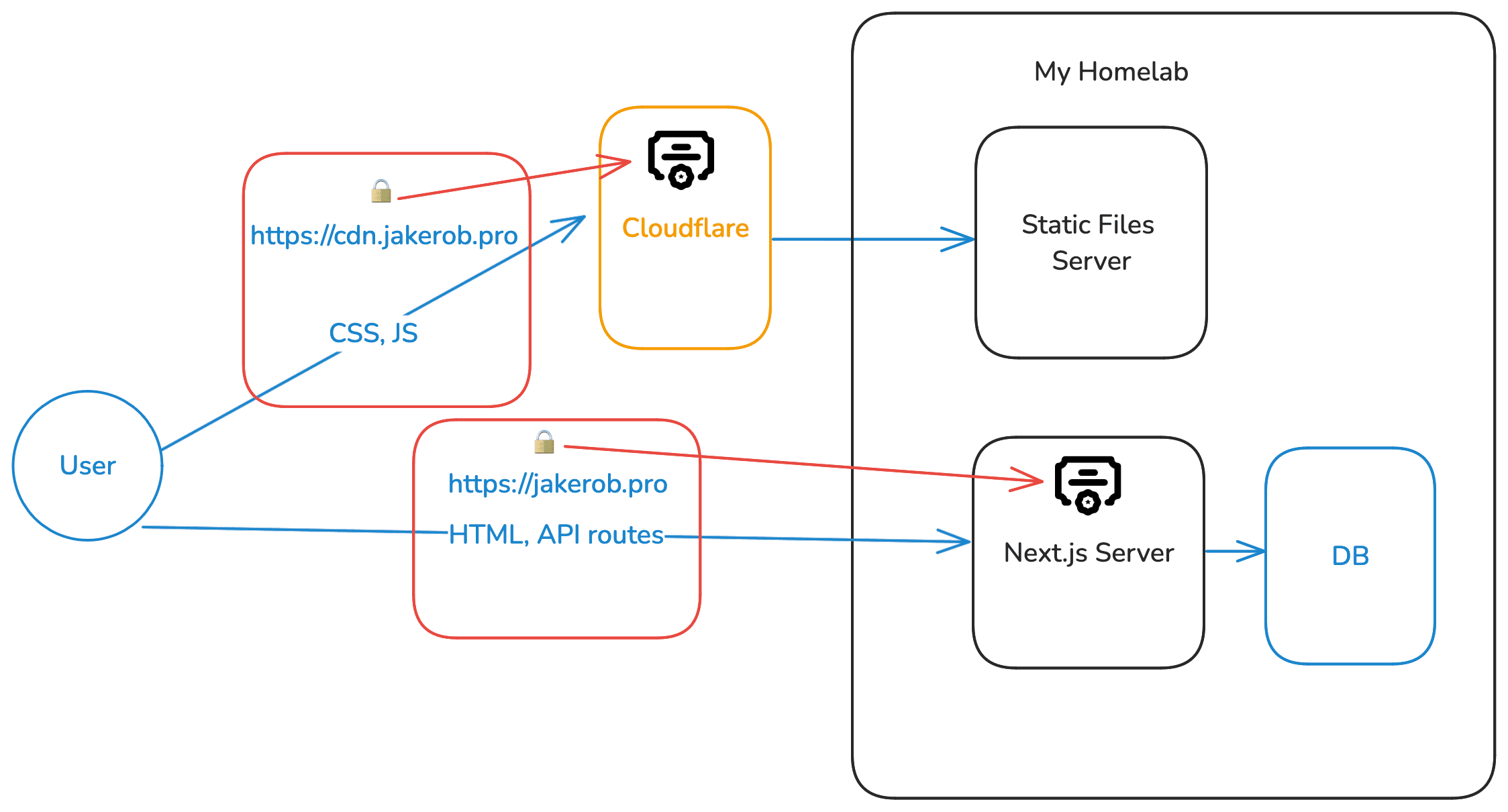

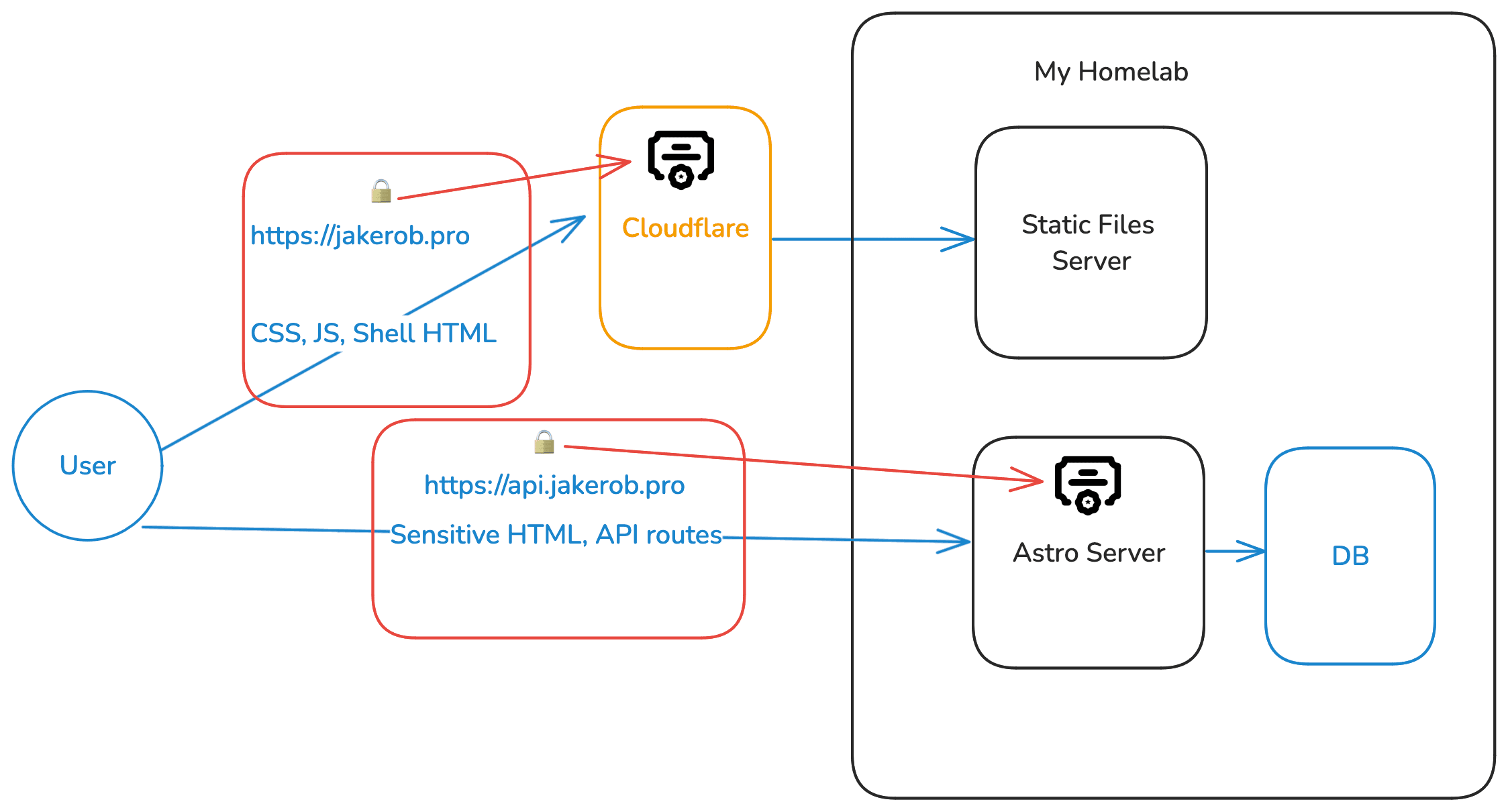

So at the very least, if we want to use a CDN, we need two separate domains! One for the public content and one for the private content. Or put another way, we need a domain for static content, and a domain for dynamic content.

Goals

So with these problems laid out, here is my wishlist / goals for a proper self-hostable stack.

- All sensitive requests must be made directly to the origin server using an https connection where I hold the TLS certificates.

- All static content can be served through a CDN

- As much as possible can be cached.

- Fine-grained reactivity. Each page can have component-level opt-in reactivity. The best example of this is a blog page: it should be static, but it may include a view counter that has dynamic content.

- No loading spinners everywhere. The entire page should not need to be an SPA in order to achieve JAM-stack-like features.

Solutions

There isn't a perfect framework that will easily accomplish all of this, but we can get close. And ultimately it's all about tradeoffs.

Next.js can get us 99% of the way there, but for mostly static content, we might be better off with Astro (especially with a future adapter).

Next.js

Next.js has a configuration option that lets you serve the static assets from a separate domain.

The option is assetPrefix, and it is set in the Next.js config file to the URL of the domain that will serve those assets.
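A minimal sketch of that setting, assuming a `next.config.ts` file (recent Next.js versions accept a TypeScript config; older ones use `next.config.js` with the same option) and the cdn.jakerob.pro domain used in this post:

```typescript
// next.config.ts
const nextConfig = {
  // Prefix the URLs of /_next/static/... assets with the CDN domain.
  // Pages and API routes are still requested from the app's own domain.
  assetPrefix: "https://cdn.jakerob.pro",
};

export default nextConfig;
```

You may want to apply the prefix only in production builds so that local development still loads assets from the dev server.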

Now all of our static assets are ready to be served from URLs like https://cdn.jakerob.pro/_next/static/chunks/4b....js, and these can be cached and are now on a separate domain.

So if I set up a server to serve static files, which will be a separate post (check this out in the meantime), I can point cdn.jakerob.pro to that, and if I do it through Cloudflare, it will properly cache the files.

And we have no privacy issue because all these files are static and public.
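As a sketch of what "properly cache" could look like on the static file server: the helper below picks a Cache-Control header per path. It assumes the files under /_next/static/ carry content hashes in their names (like the chunk URL above), so a given URL never changes its content. The function name and the fallback policy are my own choices.

```typescript
// Pick a Cache-Control header for a request path on the static-asset domain.
function cacheControlFor(pathname: string): string {
  if (pathname.startsWith("/_next/static/")) {
    // Safe to cache for a year: a new build produces new file names.
    return "public, max-age=31536000, immutable";
  }
  // Anything else on this domain is revalidated before it is reused.
  return "public, max-age=0, must-revalidate";
}

console.log(cacheControlFor("/_next/static/chunks/app.js"));
// public, max-age=31536000, immutable
```

A CDN that respects origin cache headers can then keep the hashed files at the edge for a long time, while anything unhashed gets revalidated.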

What's missing?

This seems great! I can now run my Next.js app on my self-hosted server, and all requests that need the server will be handled securely, with my own TLS certificate. And if your app is mostly dynamic (i.e., most content is loaded at runtime), you're probably fine here!

What we don't get with this is the caching of HTML files. Regardless of the rendering strategy (static, SSR, ISR), the HTML must be served from the origin server.

So while a user in Asia would get my JS and CSS rapidly (through the CDN), they will not get HTML until their request comes all the way to my origin.

Partial Pre-Rendering?

Will partial pre-rendering fix the problem? So far, I don't think so. This feature is built with the idea that an edge-server close to the user can serve the first HTML, and then stream the dynamic content to the user.

It's an amazing idea and I hope it takes off and becomes the new norm. However, it's not quite right for the homelab use case.

It works when I've tried it, but the existing problem remains: HTML still has to be handled by the origin server, meaning users far away still get a slow experience.

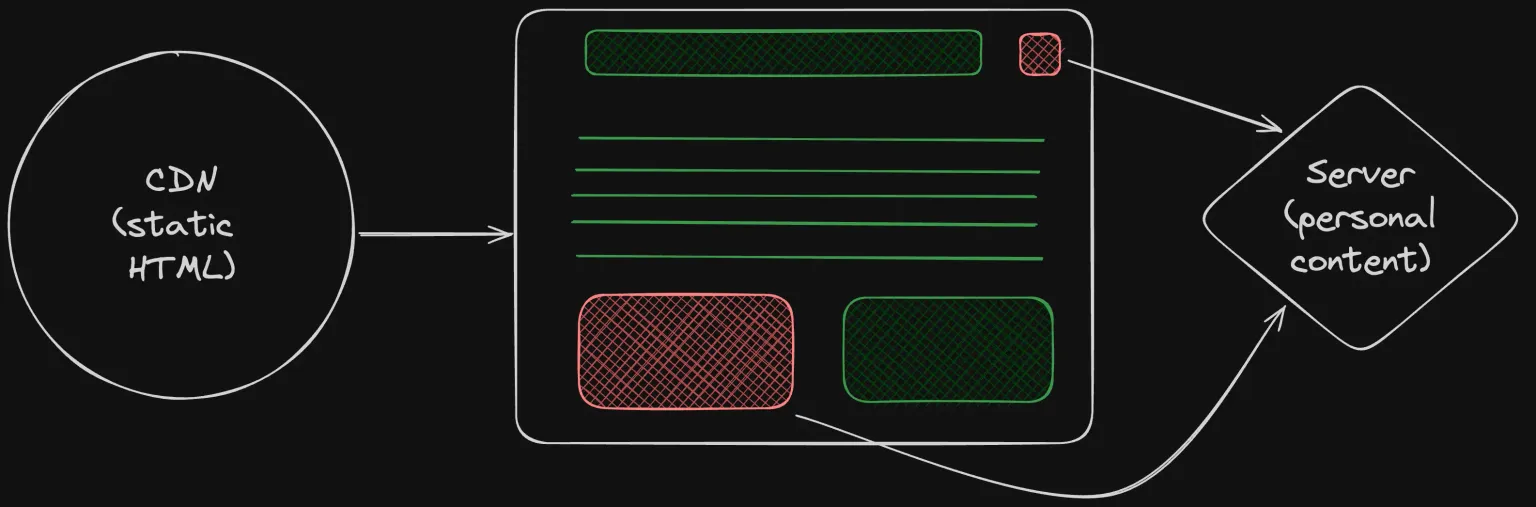

What I want instead is to have the HTML cached on a CDN, and then have the user's browser kick off a new request to my origin server to "fill in the holes" with dynamic content.

Sound familiar???

That's literally Astro Server Islands!

My take is that for the non-homelabbers, Next.js partial pre-rendering is a better solution. If you're able to start the request from an edge compute server it'll perform better. If the user needs to make requests to the origin server to get the HTML, then Astro Server Islands are a better solution.
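The "fill in the holes" idea can be sketched without any framework. Everything here is hypothetical: the `<!--island:name-->` marker format, the function names, and the fetcher. It is not how Astro implements server islands, only the general shape of the idea. In a real page this would operate on the DOM, and the fetcher would call the origin (for example something under https://api.jakerob.pro).

```typescript
// Fetches the dynamic HTML fragment for one named hole from the origin.
type FragmentFetcher = (islandName: string) => Promise<string>;

// A fresh regex per call avoids sharing lastIndex state between uses.
const holePattern = (): RegExp => /<!--island:([a-z0-9-]+)-->/g;

// Collect the distinct island names found in the cached page.
function findIslandNames(cachedHtml: string): string[] {
  const names: string[] = [];
  const pattern = holePattern();
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(cachedHtml)) !== null) {
    if (names.indexOf(match[1]) === -1) names.push(match[1]);
  }
  return names;
}

async function fillHoles(cachedHtml: string, fetchFragment: FragmentFetcher): Promise<string> {
  // Fetch all dynamic fragments in parallel.
  const fragments = new Map<string, string>();
  await Promise.all(
    findIslandNames(cachedHtml).map(async (name) => {
      fragments.set(name, await fetchFragment(name));
    }),
  );
  // Replace each placeholder with its fragment; leave unknown ones untouched.
  return cachedHtml.replace(holePattern(), (marker: string, name: string) => {
    return fragments.get(name) ?? marker;
  });
}
```

The static shell is identical for every visitor, so a CDN can cache it, and only the small fragment requests travel all the way to the origin.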

Astro Server Islands

Astro in theory checks all the boxes for a solution here. However, the self hosting solution uses the Node adapter, which doesn't allow "splitting" the dynamic and static parts into two separate servers.

It is missing the Next.js assetPrefix feature.

So, if we use the Node adapter, a single server serves both the static files and the dynamic content.

To be explicit, the generated HTML contains a script that fetches each server island from a path starting with /_server-islands/.

This is perfect, except that the /_server-islands path doesn't support a prefix.

Your browser will automatically fill in the same domain as the current context, meaning we're forced to use https://jakerob.pro/_server-islands/... and cannot use https://api.jakerob.pro/_server-islands/...
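You can see the resolution rule with the standard URL API, which follows the same rules a browser applies to a root-relative path. The island name "ViewCounter" and the page path are hypothetical, picked only for illustration.

```typescript
// How a root-relative path resolves against the page that contains it.
const pageUrl = "https://jakerob.pro/blog/some-post";
const islandPath = "/_server-islands/ViewCounter";

// Resolved against the current page, the request stays on the page's domain.
const resolved = new URL(islandPath, pageUrl).href;
console.log(resolved); // https://jakerob.pro/_server-islands/ViewCounter

// What the split setup would need instead: a configurable base for islands.
const wanted = new URL(islandPath, "https://api.jakerob.pro").href;
console.log(wanted); // https://api.jakerob.pro/_server-islands/ViewCounter
```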

In theory, though, this is how an Astro app could work. And if it is achievable, it would be absolutely perfect for the homelab self-hosted website 💯.

So as it stands now, the entire app needs to be run from the same domain.

Hopefully we can get an adapter created that is perfect for this self-hosting use case. Then Astro would be absolutely perfect.

Conclusion

There's not a perfect solution to the homelab self-hosted website problem if you have a very reactive website. Next.js comes really close, and Astro is missing a good adapter.

But the future looks bright for self hosting with some solid performance!